Course : Statistical Inference

Participants : BSc Mathematics and Data Science

Institution : Sorbonne University

Instructor : Dr. Tanujit Chakraborty

Timeline : September 2022 to December 2022

Sessions : 60 Sessions

Email : [email protected]

Course Introduction:

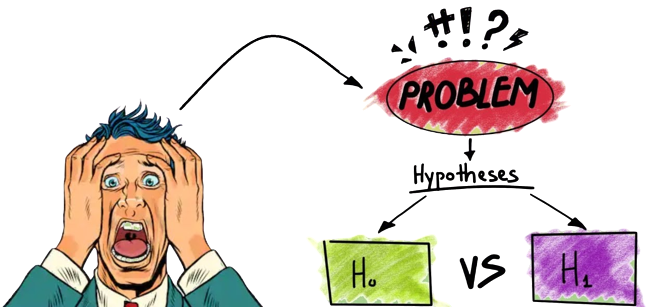

This is a classical advanced course in (parametric) Mathematical Statistics. The general theory of point estimation is presented, within which the notions of unbiasedness, consistency and optimality are studied. Particular attention is given to maximum likelihood estimation. The next topics treated are confidence intervals and hypothesis testing. The course ends with an account of the linear model. As part of the course, a series of computer labs will be run on the following topics: simulation of random variables (by inversion, by rejection sampling and by the Box-Muller transform) and Monte Carlo methods in Statistics.
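As a rough illustration of two of the lab topics named above, the following minimal sketch draws exponential variables by inversion and standard normal variables by the Box-Muller transform, then checks the draws with simple Monte Carlo averages. It is not taken from the course material: the labs use RStudio, whereas this sketch is written in Python as an assumption, and the function names, the rate 2.0, the seed and the sample sizes are all hypothetical choices.

```python
import math
import random


def sample_exponential(rate, rng):
    """Inversion: if U ~ Uniform(0, 1), then -log(1 - U) / rate ~ Exp(rate)."""
    u = rng.random()  # u lies in [0, 1), so 1 - u lies in (0, 1]
    return -math.log(1.0 - u) / rate


def sample_normal_pair(rng):
    """Box-Muller: map two independent uniforms to two independent N(0, 1) draws."""
    u1 = 1.0 - rng.random()  # shifted to (0, 1] to avoid log(0)
    u2 = rng.random()
    radius = math.sqrt(-2.0 * math.log(u1))
    angle = 2.0 * math.pi * u2
    return radius * math.cos(angle), radius * math.sin(angle)


def monte_carlo_mean(sampler, n):
    """Monte Carlo estimate of E[X] as the average of n independent draws."""
    return sum(sampler() for _ in range(n)) / n


rng = random.Random(2022)  # fixed seed (hypothetical) for reproducibility

# The mean of Exp(rate=2) is 1/2; the estimate should be close to 0.5.
exp_mean = monte_carlo_mean(lambda: sample_exponential(2.0, rng), 100_000)

# Empirical mean and variance of Box-Muller draws should be near 0 and 1.
normal_draws = [z for _ in range(50_000) for z in sample_normal_pair(rng)]
normal_mean = sum(normal_draws) / len(normal_draws)
normal_var = sum((z - normal_mean) ** 2 for z in normal_draws) / (len(normal_draws) - 1)

print(exp_mean, normal_mean, normal_var)
```

The Monte Carlo error of each average shrinks at rate $1/\sqrt{n}$, which is why the estimates settle near the theoretical values for samples of this size.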

Course Objectives:

By the end of the course, students should be able to:

- Prove the Glivenko-Cantelli theorem and assess its fundamental role within Mathematical Statistics.

- Determine the laws of order statistics and of functions of order statistics.

- Infer the laws of the main statistics built from a Gaussian random sample, including the chi-squared statistic and the t-statistic.

- Articulate the notion of a dominated statistical model as well as those of unbiased and consistent estimators. Construct minimum variance unbiased estimators by the Rao-Blackwell/Lehmann-Scheffé procedure and efficient estimators in the sense of Cramér-Rao.

- Prove efficiency properties of Maximum Likelihood Estimators.

- Construct exact and asymptotic confidence intervals, likelihood ratio tests, and goodness of fit tests (chi-squared and Kolmogorov-Smirnov).

- Construct least-squares estimators in the framework of the general linear model and prove their efficiency properties.

- Test generalized linear hypotheses on parameters of linear models.

- Represent ANOVA as a linear model and perform statistical inferences in these models.

- Carry out the computer lab classes using RStudio.

Evaluation Components:

The evaluation components for the Statistical Inference (SI) course will be as follows:

1) Mid Term Test-1 : 15% ; 2) Mid Term Test-2 : 15% ; 3) Project Work : 20% ; 4) End Term Test : 50%.

Textbooks & References:

• Rice, John A. (2006). Mathematical statistics and data analysis, Cengage Learning (Very Interesting Textbook)

• Casella, George, and Roger L. Berger (2002). Statistical inference, Cengage Learning (Nice Textbook)

• Wasserman, Larry (2004). All of statistics: a concise course in statistical inference, Springer.

• Shao, J. (2003). Mathematical Statistics, Springer.

• Shao, J. (2005). Mathematical Statistics: Exercises and Solutions, Springer.

Some Very Interesting Papers For Reading :

I recommend that all participants read these research articles (mostly non-mathematical) alongside the course. Please click on a paper's name to view it:

1. Statistics - What are the most important statistical ideas of the past 50 years? (2021)

2. Data Science - 50 Years of Data Science (2017)

3. Statistics Vs Data Science - The science of statistics versus data science: What is the future? (2021)

4. Statistics Vs Machine Learning - Prediction, Estimation, and Attribution (2020)

5. Future - The future of statistics and data science (2018)

Lecture Notes:

Class notes, tutorials, slides, data and R code will be available at github.com/ctanujit/MATH350